Artificial Intelligence (AI) has rapidly transformed the way we live, work, and interact with technology. From personalized recommendations on streaming platforms to autonomous vehicles navigating complex city streets, AI is a driving force behind these innovations. However, as AI systems become increasingly integrated into our lives, there is a growing need for transparency and accountability. This is where Explainable AI, often abbreviated as XAI, steps in to provide clarity and insight into the black box of AI decision-making.

The Black Box Conundrum

Imagine you are applying for a loan, and your application is denied. You ask why, and the response is, “The decision was made by the AI algorithm.” This lack of explanation can leave individuals frustrated and distrustful of AI systems. It’s akin to asking a magician to reveal their tricks, and the magician responding with silence. This opacity in AI decision-making has significant implications, especially in areas like finance, healthcare, and criminal justice.

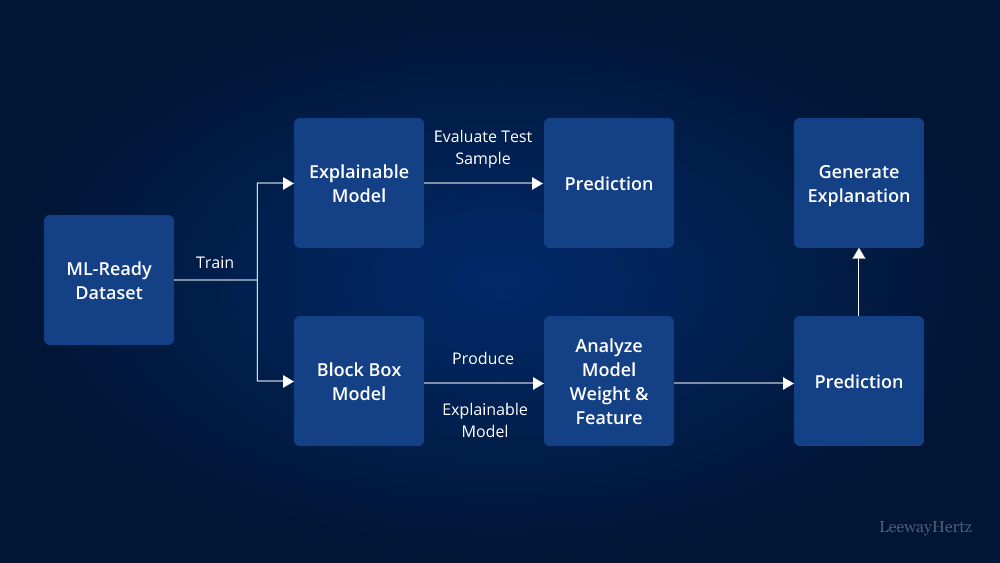

Many modern machine learning models, such as deep neural networks, are often seen as black boxes. They process data through layers of complex mathematical operations, making it challenging to understand how and why they arrive at a particular outcome. This lack of transparency makes biased or unfair decisions harder to detect and correct, which can reinforce existing inequalities and undermine trust in AI technologies.

The Need for Explainable AI

Explainable AI seeks to bridge this gap by making AI systems more interpretable and accountable. It aims to provide human-understandable explanations for AI decisions, ensuring that users can trust and validate the outcomes. Here are several compelling reasons why Explainable AI is essential:

- Trust and Accountability: When AI systems can explain their decisions, users, regulators, and stakeholders gain confidence in the technology. This trust is vital for the widespread adoption of AI in critical domains like healthcare and finance.

- Bias Mitigation: XAI tools can help identify and correct biases within AI models. Once it is clear which factors drive a decision, instances of unfairness become easier to spot and address.

- Regulatory Compliance: As regulations such as the GDPR and AI ethics guidelines become more prevalent, organizations need to demonstrate compliance. XAI tools can aid in meeting these requirements by providing transparency and documentation.

- Human-AI Collaboration: In fields like medicine, where AI assists healthcare professionals in diagnosis and treatment recommendations, explainable AI can foster better collaboration between humans and machines. Doctors can trust AI recommendations if they understand the rationale behind them.

- Debugging and Improvement: XAI can help developers identify and fix issues within AI models. When something goes wrong, explanations can help narrow down the source of the problem, making troubleshooting more efficient.
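To make the bias point above a little more concrete, here is a minimal sketch of one simple check: comparing approval rates across groups. The decisions, the group labels "A" and "B", and the function names are all made up for illustration, and a large gap is a prompt for closer investigation, not proof of unfairness on its own.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Return the share of approved decisions (1 = approved) for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        approved[group] += decision
    return {group: approved[group] / totals[group] for group in totals}


def approval_gap(decisions, groups):
    """Difference between the highest and lowest group approval rate."""
    rates = approval_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs and group membership, invented for this example.
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = approval_rates(decisions, groups)
gap = approval_gap(decisions, groups)

for group, rate in sorted(rates.items()):
    print(f"group {group}: approval rate {rate:.2f}")
print(f"approval gap: {gap:.2f}")
```

A check like this only says that outcomes differ between groups. Explanation methods are what help answer the follow-up question of which inputs are driving the difference.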

Methods of Achieving Explainable AI

There are various techniques and methods to achieve explainable AI. Here are some prominent approaches:

- Feature Importance: This method ranks input features by how much they contribute to a decision. It helps users understand which factors had the most significant influence on the AI’s output.

- Local Explanations: Local explanation methods, such as LIME (Local Interpretable Model-agnostic Explanations), fit a simple surrogate model around an individual prediction. The surrogate approximates the complex model only in the neighborhood of that prediction, so it is much easier to understand than the full neural network.

- Rule-Based Systems: These systems generate decision rules that provide a clear explanation of how an AI system reached a specific outcome. This approach is often used in applications like credit scoring.

- Visualizations: Data visualization techniques can transform complex AI processes into easily digestible graphics. These visualizations can help users comprehend the inner workings of AI models.

- Natural Language Explanations: Generating natural language explanations for AI decisions is a powerful way to make AI more transparent and user-friendly. It enables AI systems to explain their reasoning in plain language.
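The feature importance idea from the list above can be sketched in a few lines. This example uses permutation importance, one common way to estimate it: shuffle one feature at a time and measure how much the model's accuracy drops. The loan "model", its weights, and the feature names are hypothetical stand-ins for a real black box, and the data is random, so treat this as a sketch under those assumptions and not a production recipe.

```python
import random

FEATURES = ["income", "debt", "age"]


def model(row):
    """Hypothetical black-box loan model; 'age' deliberately has zero weight."""
    income, debt, age = row
    return 1 if (0.8 * income - 0.6 * debt + 0.0 * age) > 0.1 else 0


def accuracy(predict, rows, labels):
    """Fraction of rows where the prediction matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if predict(row) == label)
    return correct / len(rows)


def permutation_importance(predict, rows, labels, n_features, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by a shuffled value.
            shuffled = [row[:j] + (value,) + row[j + 1:]
                        for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Synthetic applicants with features scaled to [0, 1); labels are the model's
# own outputs, so the unshuffled baseline accuracy is exactly 1.0.
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random(), data_rng.random()) for _ in range(500)]
labels = [model(row) for row in rows]

importances = permutation_importance(model, rows, labels, n_features=len(FEATURES))
for name, score in sorted(zip(FEATURES, importances), key=lambda pair: -pair[1]):
    print(f"{name}: {score:.3f}")
```

Because the hypothetical model ignores age, shuffling that column never changes a prediction and its importance comes out as zero, while income and debt both show a clear accuracy drop. That is the kind of signal a user or auditor can act on without reading the model's internals.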

Challenges in Implementing Explainable AI

While the concept of Explainable AI is promising, it is not without its challenges. Some of the hurdles in implementing XAI include:

- Trade-Offs: There is often a trade-off between the accuracy and explainability of AI models. Simplifying models for better transparency may result in reduced performance.

- Complexity: Developing effective XAI techniques for deep learning models, which are prevalent in AI applications, is a complex task. Balancing complexity and interpretability is an ongoing challenge.

- Scalability: Ensuring that XAI techniques are scalable to handle large datasets and complex AI systems is crucial for their practical use.

- User Comprehension: While XAI can provide explanations, ensuring that these explanations are truly understandable to the end-users, especially non-technical individuals, remains a challenge.

The Future of Explainable AI

As AI continues to evolve and permeate various aspects of our lives, the demand for transparency and accountability will only grow. Explainable AI is poised to play a pivotal role in shaping the future of AI technology. By making AI systems more transparent, accountable, and understandable, XAI can help build trust, mitigate biases, and foster collaboration between humans and machines.

In conclusion, Explainable AI represents a crucial step in demystifying the world of artificial intelligence. It is not only a technological advancement but also a means to ensure that AI benefits society in an equitable and responsible manner. As we continue to unlock the potential of AI, the importance of understanding why and how AI systems make decisions cannot be overstated. With Explainable AI, we can turn the black box into a window, allowing us to harness the full potential of this transformative technology.